Creating a private cluster with Terraform

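Introduction

I need to set up a new project and then move the old services over to it.

A first estimate turned up a long list of things to do, which makes it a good chance to practice Terraform.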

Main text

| Service list |
|---|
| Create Project |
| Create Cluster |
| Create VPC |
| Create CloudSQL |
| Create Memorystore |
| Create CloudStorage |
| Copy files to CloudStorage |
| Update backend config & publish |
| Create RabbitMQ |
| Create Nginx ingress |
| Deploy & test frontend services |
| Deploy & test backend services |
| Network policy |
| Observability service |
| EFK logs |
| Update frontend/backend CI/CD & IAM permissions |

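The project itself is not created with Terraform here, because creating it is not something I handle.

I also don't know whether that step could be done with Terraform.

This post creates a bucket, a VPC, a private cluster, and Cloud NAT.

From here on, tf stands for terraform.

- Create the bucket

The state has to live somewhere, so I simply keep all of it in GCS; see "148. Terraform的基本概念" (Basic Concepts of Terraform).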

new\gcs\main.tf

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "4.63.1"

}

}

}

provider "google" {

project = "ezio-sms-o"

}

resource "random_id" "bucket_prefix" {

byte_length = 3

}

resource "google_storage_bucket" "tfstate_bucket" {

name = "${random_id.bucket_prefix.hex}-terraform-devops"

force_destroy = false

location = "asia-east1"

storage_class = "Nearline"

// Object versioning

versioning {

enabled = false

}

}

output "bucket_name"{

description = "get bucket name"

value = google_storage_bucket.tfstate_bucket.name

}
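The file above uses `random_id` without declaring the random provider. Terraform can infer `hashicorp/random` on its own, but pinning it next to `google` keeps `init` reproducible. Below is a minimal sketch of a `terraform` block that could replace the one at the top of new\gcs\main.tf; the `~> 3.5` constraint is an assumption of mine, not something taken from the original setup.

```hcl
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "4.63.1"
    }
    # Assumption: any recent 3.x release of the random provider is fine here.
    random = {
      source  = "hashicorp/random"
      version = "~> 3.5"
    }
  }
}
```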

Note the bucket name and the prefix you plan to use, and write them into backend.hcl.

They are used further down.

new\backend.hcl

bucket = "cb0392-terraform-devops"

prefix = "terraform/state"
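backend.hcl is a partial backend configuration: the `backend "gcs"` block in the Terraform files can stay empty and receive these values at init time through `-backend-config`. For comparison, here is a sketch of the equivalent inline form with the same two values. It would replace the empty block rather than sit next to it, since a configuration can only have one backend.

```hcl
terraform {
  backend "gcs" {
    bucket = "cb0392-terraform-devops" # bucket name reported by the bucket_name output
    prefix = "terraform/state"         # state objects are stored under this prefix
  }
}
```

Keeping the values in a separate file has the advantage that several directories can share one backend.hcl.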

- Create the VPC and Cloud NAT

new\gke\main.tf

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "5.4.0"

}

}

backend "gcs" {

}

}

provider "google" {

project = "ezio-sms-o"

zone = "asia-east1-b"

}

# VPC

resource "google_compute_network" "basic" {

name = "basic"

auto_create_subnetworks = false

# delete_default_routes_on_create = false

routing_mode = "REGIONAL"

}

resource "google_compute_subnetwork" "basic" {

name = "basic-subnet"

ip_cidr_range = var.subnet_cidr

region = var.gcp_region

network = google_compute_network.basic.id

private_ip_google_access = true

secondary_ip_range {

range_name = "gke-pod-range"

ip_cidr_range = var.pod_cidr

}

secondary_ip_range {

range_name = "gke-service-range"

ip_cidr_range = var.service_cidr

}

}

# Cloud NAT

resource "google_compute_router" "default_cloudnat" {

name = "default-nat"

region = var.gcp_region

network = google_compute_network.basic.id

}

resource "google_compute_router_nat" "default_cloudnat" {

name = "nat"

router = google_compute_router.default_cloudnat.name

region = var.gcp_region

source_subnetwork_ip_ranges_to_nat = "LIST_OF_SUBNETWORKS"

nat_ip_allocate_option = "MANUAL_ONLY"

subnetwork {

name = google_compute_subnetwork.basic.id

source_ip_ranges_to_nat = ["ALL_IP_RANGES"]

}

nat_ips = [google_compute_address.nat.self_link]

}

resource "google_compute_address" "nat" {

name = "nat"

address_type = "EXTERNAL"

network_tier = "PREMIUM"

region = var.gcp_region

}
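With `nat_ip_allocate_option = "MANUAL_ONLY"`, outbound traffic from the subnet leaves through the reserved address, which is usually the IP you hand to third parties for allowlisting. A small sketch of an output that prints it after `tf apply`; the output name `nat_ip` is hypothetical and not part of the original files.

```hcl
# Hypothetical output name; exposes the reserved static address used by Cloud NAT.
output "nat_ip" {
  description = "Static external IP used by Cloud NAT for outbound traffic"
  value       = google_compute_address.nat.address
}
```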

For how the variables are set up, again see "148. Terraform的基本概念" (Basic Concepts of Terraform).

new\gke\terraform.tf

variable "project_id" {

default = "ezio-sms-o"

}

variable "gcp_region" {

default = "asia-east1"

}

variable "gcp_zone" {

type = list(string)

default = ["asia-east1-b"]

}

variable "gke_name" {

default = "fixed"

}

variable "subnet_cidr" {

default = "10.10.0.0/16"

}

variable "pod_cidr" {

default = "192.168.8.0/21"

}

variable "service_cidr" {

default = "192.168.16.0/21"

}

variable "master_cidr" {

default = "10.0.2.0/28"

}
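Most of the variables above carry only a default. As a sketch of how one of them could be tightened, the declaration below adds a type, a description, and a validation rule so that a malformed CIDR is rejected at plan time instead of at deploy time. The description text and the validation rule are my additions; this block would replace the existing `subnet_cidr` declaration, not be added alongside it.

```hcl
variable "subnet_cidr" {
  type        = string
  description = "Primary IP range of the node subnet"
  default     = "10.10.0.0/16"

  validation {
    # cidrhost() fails on malformed input, and can() turns that failure into false.
    condition     = can(cidrhost(var.subnet_cidr, 0))
    error_message = "The subnet_cidr value must be a valid IPv4 CIDR block, for example 10.10.0.0/16."
  }
}
```

The same pattern would apply to `pod_cidr`, `service_cidr`, and `master_cidr`.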


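- Create GKE

This is the part I spent the longest researching, because it relies on Terraform's module concept.

It helps to first read "[Terraform] 入門學習筆記" (Terraform beginner's notes) to understand what a module is.

After that, it comes down to reading the official documentation.

new\gke\main.tf

Yes, this is the same file as the VPC section above, because it is all one file.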

# GKE

module "gke" {

source = "terraform-google-modules/kubernetes-engine/google//modules/private-cluster"

version = "29.0.0"

project_id = var.project_id

name = var.gke_name

# Regional cluster (false = zonal)

regional = false

region = var.gcp_region

zones = var.gcp_zone

network = google_compute_network.basic.name

subnetwork = google_compute_subnetwork.basic.name

ip_range_pods = google_compute_subnetwork.basic.secondary_ip_range[0].range_name

ip_range_services = google_compute_subnetwork.basic.secondary_ip_range[1].range_name

http_load_balancing = true

network_policy = false

horizontal_pod_autoscaling = true

filestore_csi_driver = false

# private cluster

enable_private_endpoint = false

enable_private_nodes = true

master_global_access_enabled = false

master_ipv4_cidr_block = "10.0.0.0/28"

# gke version

release_channel = "UNSPECIFIED"

remove_default_node_pool = true

# log record

logging_enabled_components = ["SYSTEM_COMPONENTS"]

create_service_account = false

node_pools = [

{

autoscaling = false

node_count = 2

name = "default-node-pool"

machine_type = "e2-custom-4-12288"

local_ssd_count = 0

spot = false

disk_size_gb = 100

disk_type = "pd-standard"

image_type = "COS_CONTAINERD"

enable_gcfs = false

enable_gvnic = false

logging_variant = "DEFAULT"

auto_repair = true

auto_upgrade = false

preemptible = false

initial_node_count = 80

},

]

node_pools_oauth_scopes = {

all = [

"https://www.googleapis.com/auth/logging.write",

"https://www.googleapis.com/auth/monitoring",

"https://www.googleapis.com/auth/devstorage.read_only",

"https://www.googleapis.com/auth/service.management.readonly",

"https://www.googleapis.com/auth/servicecontrol",

"https://www.googleapis.com/auth/trace.append"

]

}

node_pools_labels = {

all = {}

default-node-pool = {

default-node-pool = true

}

}

node_pools_metadata = {

all = {}

default-node-pool = {

node-pool-metadata-custom-value = "fixed-node-pool"

}

}

node_pools_taints = {

all = []

default-node-pool = [

{

key = "default-node-pool"

value = true

effect = "PREFER_NO_SCHEDULE"

},

]

}

node_pools_tags = {

all = []

default-node-pool = [

"default-node-pool",

]

}

}

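A quick word on how modules are used.

The first time you use a module you have to initialize, and also point Terraform at the GCS bucket that stores the tfstate: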

tf init -backend-config=../backend.hcl

If you add a submodule later, you do not need to initialize again; a get is enough:

tf get

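Creating a private cluster requires a submodule.

In the registry, go from kubernetes-engine to its private-cluster submodule.

For some parameters it is not obvious which GKE setting they correspond to.

tf validate catches a good share of mistakes,

but some errors only show up at deploy time.

In the end I found it faster to delete the cluster by hand and run tf apply again.

I also considered skipping the module and writing the resources directly.

But the official document Using GKE with Terraform says:

"Additionally, you may consider using Google's kubernetes-engine module, which implements many of these practices for you"

so I gave it a try.

Note that the submodule's source differs from the main module's, and some of its parameters do not exist in the main module.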

Troubleshooting

While deploying to GKE, I found that I could not pull images from Google Artifact Registry.

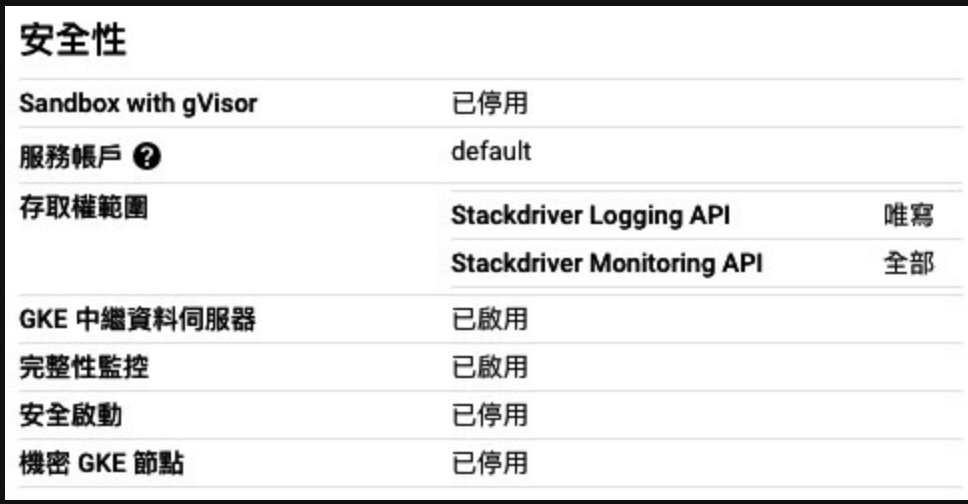

The cause turned out to be missing access scopes; see Access scopes in GKE.

The fix is to add the allowed API URLs to node_pools_oauth_scopes.
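The post does not say which scope was missing, so the following is only one possible shape of the fix. It assumes the broad `cloud-platform` scope is acceptable and that access is narrowed through IAM roles on the node service account instead. The local name `node_oauth_scopes` is hypothetical.

```hcl
locals {
  # Assumption: use the broad cloud-platform scope and rely on IAM roles
  # of the node service account to restrict what the nodes can actually do.
  node_oauth_scopes = [
    "https://www.googleapis.com/auth/cloud-platform",
  ]
}

# Inside the module "gke" block, the argument would then read:
#   node_pools_oauth_scopes = {
#     all = local.node_oauth_scopes
#   }
```

Scopes only cap what the node's credentials may call. The node service account itself still needs read permission on the repository, for example the Artifact Registry Reader role, or pulls will keep failing.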